1. Introduction to AI in Web Development

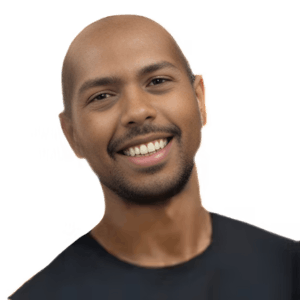

Thanks for coming! I'm Wes Bos, a Canadian with Dutch blood. I make web dev courses and host the Syntax podcast. This talk is about coding and building with AI models. AI gives developers a boost in productivity and code quality. Let's discuss the mediums through which we use AI as web developers, like GitHub Copilot and the CLI.

Thanks, everybody, for coming out. I'm super stoked about this talk. My name is Wes Bos. I'm a Canadian, however, I have 100% Dutch blood. So if you want to come up after and teach me some sort of Dutch word, I would actually really like that. I make web dev courses. I have a highly awarded podcast called Syntax, which I'm pretty stoked about. And this talk today is about coding with AI as well as actually building stuff with these AI models.

And I want to start off with a few things. I know there's a couple of you in the room are like, oh, this jerk off that's talking about AI right now, right? I have nothing here to sell you. I don't work for some AI company. I don't have any crazy stuff. Well, if you're that stoked about it, I might try to sell you something later. So don't get too excited. I'm not a huckster for trying to push it. I'm not a Web3 crypto guy that figured out it didn't work out. I'm just stoked about a lot of this AI stuff that's going on, and I'm here just to tell you stuff that is cool.

So AI, I think it gives us developers a massive boost in productivity, code quality, as well as the kind of stuff that we're able to build. We're still figuring a lot of this stuff out. This is just, like, six months to a year of things going crazy. But I don't think it's going anywhere. I always compare it to the crypto stuff, where I was like, oh, I'll keep my eye on that. And that stuff was never really that useful to me, but this stuff is just like, wow, I can't stop using this stuff in my day to day.

So let's talk about some of the mediums through which we use AI as web developers. So probably the biggest one. Who here is using GitHub Copilot or something like that, right? Yeah, probably about half the room here. And it's super handy. As you're writing code, it kind of just says, is this what you want? And you hit it. We also have CLI. Oh, shit, there's supposed to be a video there.

2. AI in Web Development Tools

Imagine using GitHub Copilot or Fig for CLI commands and chat apps for quick answers. Voice input and prompts are being explored. AI can generate boilerplate code and provide context-aware solutions. It solves your problem directly. For example, generating dummy data is super handy.

Just imagine something like GitHub Copilot or Fig where you have a CLI command and it gives you access and then shows you what all the CLI commands are. It's pretty handy. There's chat apps, which probably a lot of us have also interfaced with, where you just ask it, how do I do X, Y, and Z? There's pull request templates now. You spend all this time working on your code and then you have to create a pull request. It's sometimes hard to figure out what all that good stuff is.

And there's so many different experiments that we have. There's voice, GitHub Copilot Voice, which is kind of cool. It's huge for accessibility, but it's also kind of interesting: is your voice a new input? You get your mouse, you get your keyboard, is your voice a new input? We don't know. We're experimenting with it. There's brushes, where you just click a button: make it accessible, document this, add types. Prompts as the new syntax. So there's a lot of talk around, like, do we write code anymore or do we just write prompts? And obviously not yet. But there's people trying to explore whether that is something. Maybe it's not. Boilerplate generation for these things. Do you need boilerplate generators anymore, or can AI do that where it's a little more well-suited?

So like why do you want to use these types of things? So first, it's faster than Google. It's context-aware, which is really the game-changer, is it knows about the code that you're writing. It knows about the types of your project. It knows about, like, what tabs you have open at the time. And all of that context really helps when you're trying to find an answer. It solves your problem. It doesn't solve somebody else's problem and you can't say, oh, this dude on StackOverflow solved the problem this way. How do I, like, apply that to what my problem is? It goes directly for your problem. So let's look at some examples. Dummy data. Generating dummy data is super handy. So this is, ah, shoot. What's going on with the... Hold on. This is not gonna be good if this doesn't work.

3. Technical Difficulties with Wi-Fi Connection

Sorry, folks. I'm having some technical difficulties. Let me figure this out. It seems to be a Wi-Fi issue with codehike.org not getting the grammars.

Um, let's see here. Sorry, folks. Sorry, folks. Where did my thing go? Uh-oh. Shoot. Sorry, folks. One sec. Let me figure this out. I've got to take my award down. Um... Should we debug it live? Oh, no. Failed to fetch. You crap. Is it the Wi... It's local, though. Let's try. It's like... Which one is that? Can you just come up and show me? Oh, it's definitely the Wi-Fi. I'm seeing the first error is codehike.org is not getting the grammars. Sorry. I connected to my phone before this.

4. Using AI for Dummy Data and Reusable Code

Is this how much time I have left? Oh, they told me 20 minutes. We got extra time. So dummy data is handy for creating examples. AI can generate types and stick them right into your code. You can select elements on the page and write a function to paint the video frame to canvas. Make it reusable by turning it into a class and generating CSS for your HTML.

Is this how much time I have left? Is this how much time I have left? Oh, they told me 20 minutes. We got extra time. This is awesome. I will give you two more minutes. I appreciate that.

All right. So dummy data. Give me an array of people. Each person first and last. So basically I'm just rambling what I want. Much like a project manager. And on the other end they give you types and actual data. And this is so handy, especially for someone like me who creates a lot of examples and you want real data in there. A lot of people use Faker.js. This is way better than Faker.js. In this case, take this code and add types. So you can often infer a lot of types, but AI can also generate them and stick them right into your code. Do the work. So you select a couple elements on the page and you say write a function to paint the video frame to canvas as frequently as possible. Give the entire output in TypeScript and bam. At the other end you get that sort of boilerplate code.
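As a rough illustration of what comes back from a prompt like "give me an array of people, each with a first and last name", here is a hand-written TypeScript sketch. The `Person` shape, the `fullName` helper, and the sample values are assumptions for illustration, not the output shown in the talk.

```typescript
// Hypothetical shape for the "array of people" prompt; field names are assumed.
interface Person {
  id: number;
  firstName: string;
  lastName: string;
}

// Sample data of the kind an assistant might generate for a demo.
const people: Person[] = [
  { id: 1, firstName: "Ada", lastName: "Lovelace" },
  { id: 2, firstName: "Grace", lastName: "Hopper" },
  { id: 3, firstName: "Alan", lastName: "Turing" },
];

// Typed helper: the compiler checks that only Person fields are used.
function fullName(person: Person): string {
  return `${person.firstName} ${person.lastName}`;
}

console.log(people.map(fullName));
```

Having the type next to the data is the useful part: any demo code that consumes `people` gets checked against `Person`.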

Make reusable. This is something I do a lot. I'm just like hacking on like a demo. You're making functions here or there and okay, it's working. Now I need to go back and clean it up. Like how many times are you like, okay, it works but I gotta clean it up yet, right? So you just make it into a reusable class and doo-doo-doo-doo. It makes it into a beautiful, reusable class and uses best practices and whatnot. Generate the CSS for you. So I have this HTML.

5. Using GitHub Copilot for CSS Selectors and Regexes

GitHub Copilot chat generates CSS selectors and regexes. It explains regex breakdowns and writes tests. Test-driven development with AI saves time and provides updated code. It's not perfect, but it can save you at least nine minutes.

This is a really handy use case for the new GitHub Copilot chat. You can say, select this HTML and say, given this selected HTML, write me a bunch of generated CSS selectors between X and Y and use this term. Write regexes.

This one is so helpful. I hate writing regexes. So you just give it some examples of what you want it to do and say, all right, here's a regex and like, how do you know that that actually works? I don't know a whole lot about regex, well, explain it. You come back to this regex in a couple months. You can ask it, okay, break it down, explain what each of these specific things do. Also, go ahead and write a few tests for the regexes that we have there so you know that if you ever have to monkey with it or ever change what it is looking like, your tests are going to fail. And by the way, I did run all of those tests. And it's obviously not a major example, but I ran it through a testing library and it worked right away.
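The talk does not show the regex itself, so the following is a hypothetical stand-in: a timestamp pattern with the piece-by-piece explanation written as comments, plus a small table of test cases. The pattern, the `toSeconds` helper, and the cases are all assumptions chosen for illustration.

```typescript
// Hypothetical example: match timestamps such as "05:32" or "1:05:32".
//   ^            start of the string
//   (?:(\d+):)?  optional hours followed by a colon
//   ([0-5]?\d)   minutes, 0 to 59
//   :            separator
//   ([0-5]\d)    seconds, 00 to 59 (always two digits)
//   $            end of the string
const TIMESTAMP = /^(?:(\d+):)?([0-5]?\d):([0-5]\d)$/;

// Convert a timestamp to seconds, or return null when it does not match.
function toSeconds(input: string): number | null {
  const match = TIMESTAMP.exec(input);
  if (match === null) return null;
  const hours = Number(match[1] ?? 0);
  const minutes = Number(match[2]);
  const seconds = Number(match[3]);
  return hours * 3600 + minutes * 60 + seconds;
}

// Table-driven checks: if the regex is ever changed, these should catch regressions.
const cases: Array<[string, number | null]> = [
  ["05:32", 332],
  ["1:05:32", 3932],
  ["5:3", null],
  ["hello", null],
];
for (const [input, expected] of cases) {
  console.assert(toSeconds(input) === expected, `unexpected result for ${input}`);
}
```

Keeping the explanation as comments next to the pattern covers the "come back in a couple of months" case without asking the model again.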

Test-driven development is: write a function that satisfies these tests. I said, all right, I'm just going to imagine an API of take "I like oranges" and swap "oranges" with "bananas", right? And then on the other end, it's going to give you a function that returns an object that has an "and swap" method inside of it. Then if it doesn't work right away, you can say, all right, well, thanks, AI, but I've got this error. And you can have this recursive work with it where you say, okay, it didn't work. This is the error I got, and it will run it again and give you some updated code. At a certain point, I was starting to run in circles with it, because it's not perfect. I'm not going to stand up here and say this is going to code everything for you. But it's certainly going to save you nine minutes, at least, on this type of stuff, you know?
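A minimal sketch of the kind of function that could satisfy such a test. The exact API is not shown in the transcript, so the chain `take(...).andSwap(...).with(...)` and its method names are a reconstruction from the spoken description and should be read as assumptions.

```typescript
// Hypothetical fluent API reconstructed from the spoken description:
//   take("I like oranges").andSwap("oranges").with("bananas")
function take(text: string) {
  return {
    andSwap(target: string) {
      return {
        // Replace every occurrence of `target`; split/join avoids regex escaping.
        with(replacement: string): string {
          return text.split(target).join(replacement);
        },
      };
    },
  };
}

console.log(take("I like oranges").andSwap("oranges").with("bananas"));
```

Writing the call site first, as the test does, pins down the shape of the API before any implementation exists, which is what gives the model something concrete to satisfy.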

6. Integrating AI into Applications

Convert code to promises and async/await. AI handles complex inputs and converts English to the CLI equivalent. GitHub Copilot's CLI tool saves time by installing dependencies. Let's explore integrating AI into applications.

Convert it to promises. So it just chained it with then. And I said, okay, well, convert that to async/await. Boom, out the other way. And then at that point, I said, okay, but only "get photos of weather" is required to wait for "get weather". The rest of them can be run concurrently. And at that point, it didn't know what to do. It just kept giving me the wrong answer over and over again. But that's not to write it off. That's to say, oh well, that got me 73% of the way there.
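For reference, the refactor described here (one dependent pair, everything else concurrent) can be sketched as follows. The function names `getWeather`, `getPhotosOfWeather`, `getNews`, and `getUser`, the `delay` helper, and the returned values are assumed stand-ins, since the demo code is not in the transcript.

```typescript
// Hypothetical stand-ins for the API calls in the demo; each resolves after a short delay.
const delay = <T>(value: T, ms: number): Promise<T> =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));

const getWeather = () => delay({ conditions: "rain" }, 20);
const getPhotosOfWeather = (conditions: string) => delay([`${conditions}-1.jpg`], 20);
const getNews = () => delay(["headline"], 20);
const getUser = () => delay({ name: "Wes" }, 20);

async function loadDashboard() {
  // Only the photos depend on the weather, so only this chain is sequential.
  const weatherAndPhotos = (async () => {
    const weather = await getWeather();
    const photos = await getPhotosOfWeather(weather.conditions);
    return { weather, photos };
  })();

  // The independent requests start immediately and run alongside the chain above.
  const [{ weather, photos }, news, user] = await Promise.all([
    weatherAndPhotos,
    getNews(),
    getUser(),
  ]);
  return { weather, photos, news, user };
}

loadDashboard().then((result) => console.log(result));
```

Wrapping the dependent pair in its own async function and passing everything to `Promise.all` keeps the total wait close to the slowest branch rather than the sum of all calls.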

It's really good at complex input. So how many of you have ever had to use FFmpeg before? It's awful, right? It's amazing, great tool, but there's a lot of complex inputs with it. Look at all these Vs and As and colons and things like that. And it's really good at taking English input or whatever language and converting it to the CLI alternative. Explain it to me. So if you don't know what those things are, again, it's going to break it down into the pieces and say, this piece means X, Y, and Z.

I use this one quite a bit. This is really cool. So if you are looking at a demo of some code, you're saying, oh, I want to run that but I don't have the dependencies installed. So instead of copying and pasting every import or require, flipping over to your terminal, and running npm install, you just use the question mark, question mark, which is GitHub Copilot's CLI tool. So you say, install these. And then boom, it will say, all right, I think you want to npm install the following things. Again, that's just a quick time saver that you can use rather than having to copy and paste them all out. I could show you examples all day, but let's flip to the other side and look at how... oh, that's annoying. My cursor is on top of that. Does that bug anybody? You know what also bugs me? That this is not import. The require, we'll get that changed next year. So let's look at how you can integrate AI into your application.

7. Using AI to Enhance Podcast Experience

I run a highly awarded podcast called Syntax. We're looking at how to integrate tools into a better experience to surface podcast content in different ways. We use a service that transcribes the podcast and provides JSON data for each word, including timestamps and speaker information.

So I run a... alright, we can put it back up now. I run a highly awarded podcast called Syntax. Oh, is it the wrong way? Oh, shoky doodle. All right. And we're looking at, like, okay, how can we use these tools to integrate into a better experience so that we can surface the content that is inside of the podcast in a bunch of different ways? Maybe you don't want to listen to an entire podcast, or maybe you need to be able to find where in the podcast we talked about something. That's my Twitter DMs all day long. Wes, in what episode did you talk about X, Y, and Z? What was the thing that you used to delete your old NPM modules, right? So we use this service. There's lots of different models out there that will transcribe it. What we get is some JSON data for every single word: when it started, when it ended, who the speaker actually is, and all kinds of other really good data about it.
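A sketch of how word-level transcript data of this kind might be typed and grouped into the "utterances" mentioned later in the talk. The field names (`text`, `start`, `end`, `speaker`) and the millisecond unit are assumptions; the actual service and its JSON schema are not named in the transcript.

```typescript
// Assumed shape of one transcribed word; real services use their own field names.
interface Word {
  text: string;
  start: number; // assumed: milliseconds from the start of the episode
  end: number;
  speaker: string;
}

interface Utterance {
  speaker: string;
  start: number;
  end: number;
  text: string;
}

// Merge consecutive words from the same speaker into one utterance.
function toUtterances(words: Word[]): Utterance[] {
  const utterances: Utterance[] = [];
  for (const word of words) {
    const last = utterances[utterances.length - 1];
    if (last !== undefined && last.speaker === word.speaker) {
      last.text += ` ${word.text}`;
      last.end = word.end;
    } else {
      utterances.push({
        speaker: word.speaker,
        start: word.start,
        end: word.end,
        text: word.text,
      });
    }
  }
  return utterances;
}
```

Grouping by speaker turn keeps the timestamps needed for "where in the episode" questions while giving the model far fewer, more readable records than one entry per word.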

8. Formatting AI Output and Condensing Transcripts

What we want from the AI is output formatted as JSON. We can confidently parse that output as JSON. We summarize the podcast transcript into succinct bullet points. Models have token limits, and a one-hour podcast exceeds the limits of most accessible models. Condensing the transcript is the solution. It brings down the input without losing any details. We then write a prompt to summarize the transcript and create additional information in JSON format.

So what we want from the AI is formatted as JSON. This is really cool. Sometimes it takes a little bit of coercing, but you can tell these AI models, ChatGPT and whatever, okay, I want this data, but give it to me formatted like X, Y, and Z. So you can say, give me the output formatted as JSON. And I've got it to a point where I can confidently JSON.parse the output from the AI. I've still wrapped a try/catch around it, just in case. But after probably running it 300 times, it's never not given me straight-up JSON.
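The "JSON.parse with a try/catch around it" step might look like the sketch below. The `ParseResult` type and the `parseModelJson` name are illustrative assumptions, not code from the talk.

```typescript
// Result type so callers must handle the failure case explicitly.
type ParseResult<T> = { ok: true; value: T } | { ok: false; error: string };

// Parse a model reply as JSON without letting a bad reply throw.
// Note: `as T` is an unchecked cast; validate the shape separately if it matters.
function parseModelJson<T>(raw: string): ParseResult<T> {
  try {
    return { ok: true, value: JSON.parse(raw) as T };
  } catch (err) {
    return { ok: false, error: err instanceof Error ? err.message : String(err) };
  }
}

const reply = parseModelJson<{ title: string }>('{"title": "Episode summary"}');
if (reply.ok) {
  console.log(reply.value.title);
}
```

Returning a result object instead of throwing makes the rare non-JSON reply an ordinary branch, for example a retry, rather than a crash.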

So we have the transcript. Then what we do is we say, summarize the podcast transcript into very succinct bullet points, each containing a few words. The actual prompt for that is much longer and takes a little bit of time, but basically you give it the timestamp, you give it the speaker, and you give it what are called utterances, the things that were said.

However, there's a bit of a problem in that these models are only allowed to take a certain amount of input. It's measured in tokens. A token is kind of like a word, but it's a little bit different, and periods and quotation marks are tokens as well. So a one-hour podcast is 15,000 tokens, and that's beyond the limit of most accessible AI. GPT-3.5 has a 4,000 token limit; GPT-4 is about 8,000 tokens. Then the last three of these are not accessible to most mortals right now. Anthropic is saying they're going to allow you to use 100,000 tokens, which is going to be wild because you could literally send it your entire codebase. Well, it depends on how big your codebase is, but you can send it quite a bit of context for it to actually understand how it works.

But here we are: even if we've got the money for GPT-4, you only have 8,000 tokens, and that includes the reply that it's sending you. So in reality you can really only send it 6,000, and we have 15,000. So the answer to AI not being able to fit it is AI, which is kind of scary, that the answer to a lot of AI problems is also AI. The way it works is you condense, or summarize, what you have. We take the input transcript, which is how it came out of my mouth, and we say, please condense this to be about 80% shorter or 50% shorter or whatever, but do not give up any details. And surprisingly, I have a lot of filler words that I say, and it can do a really good job at bringing it down to 50%, 30% of the actual input without getting rid of anything. I just kept reading through them, and I'm like, yeah, it didn't really leave anything out.

At that point you have the transcript that's been condensed. Every utterance is smaller without leaving out any information, and then we write this massive prompt that says summarize the provided transcript into succinct, blah, blah, blah. Additionally, please create the following for the episode: a one-to-two-sentence description, tweets, blah, blah, blah, all kinds of information. Return each of them in JSON so that it looks like that.
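The token arithmetic here can be written down as a small helper. The numbers (8,000 token limit, about 2,000 reserved for the reply, a 15,000 token transcript) come from the talk; the `Budget` shape, the `condenseRatio` name, and the separate `promptTokens` allowance are assumptions added for illustration.

```typescript
interface Budget {
  contextLimit: number; // total tokens the model accepts, reply included
  replyTokens: number; // tokens reserved for the model's answer
  promptTokens: number; // assumed allowance for the instructions themselves
}

// Fraction of the original transcript that fits (1 means no condensing is needed).
function condenseRatio(transcriptTokens: number, budget: Budget): number {
  const available = budget.contextLimit - budget.replyTokens - budget.promptTokens;
  if (available <= 0) throw new Error("No room left for the transcript");
  return Math.min(1, available / transcriptTokens);
}

// 8,000 limit minus 2,000 for the reply leaves 6,000 for a 15,000 token transcript.
const ratio = condenseRatio(15000, {
  contextLimit: 8000,
  replyTokens: 2000,
  promptTokens: 0,
});
console.log(`Condense the transcript to about ${Math.round(ratio * 100)}% of its length`);
```

With these figures the transcript has to shrink to roughly 40% of its original size, which lines up with the 30 to 50% range described above. In practice the instructions also consume tokens, so the real target would be somewhat lower.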

9. Crafting a Request and Getting the Result

If you have feedback or clarifications, please put it in the notes. Craft a request to your model, providing the podcast details and the entire prompt. Fit it into about 6,000 tokens, leaving 2,000 tokens for the response. The result is amazing, providing the title, description, and detailed summary of each topic discussed in the episode.

Kind of the key here, and this one took me the longest time, remember I said that JSON.parse thing, is that it would always be like, okay, here's your result. I'm like, no, don't say anything. Just give me the JSON. Come on, you know, and I don't want to regex it or whatever. So I finally landed on: if you, the AI, have feedback or clarifications, please put it in the notes. And I give it its own little spot. And sometimes it has stuff to tell me, but it puts it in the notes section, which is really nice of it.

So once you have that massive prompt, you craft the request to your model. We're using OpenAI here, but I guarantee this is going to bounce around. What we're using is going to change over the next couple of months. So basically, you tell the system what it is. You say Syntax is a podcast about web development. This is episode number 583. It is entitled whatever. Then you give it the entire prompt I just showed you in the last slide. And then finally, that entire condensed transcript that we now have in a much shorter form. So generally, try to fit that into about 6,000 tokens, leaving yourself about 2,000 tokens for the response.
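The three-part request described here (system context, the long prompt, then the condensed transcript) could be assembled roughly as below. The `role`/`content` message shape follows the common chat-style API convention; the `buildMessages` helper, the `Episode` type, and the exact wording of `NOTES_RULE` are assumptions, and no real API call is made.

```typescript
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface Episode {
  number: number;
  title: string;
}

// Paraphrase of the instruction from the talk that keeps commentary out of the JSON body.
const NOTES_RULE =
  'Return only JSON. If you have feedback or clarifications, put it in the "notes" field.';

function buildMessages(
  episode: Episode,
  prompt: string,
  condensedTranscript: string,
): ChatMessage[] {
  return [
    {
      role: "system",
      content:
        `Syntax is a podcast about web development. ` +
        `This is episode number ${episode.number}. It is entitled "${episode.title}".`,
    },
    { role: "user", content: `${prompt}\n${NOTES_RULE}` },
    { role: "user", content: condensedTranscript },
  ];
}
```

Keeping the message construction separate from the network call makes it easier to swap providers later, which fits the expectation that the model in use will change.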

Then back from the result, it's pretty amazing what you get. So it says, this is the title: Can vanilla CSS replace Sass? A deep dive. Description: it does a really good description of what it is. Topics: you ask it to group them into a bunch of topics. Then we get a detailed summary of every single topic or thing that we talk about through the entire episode. So it gives you timestamps of where it started, and it kind of goes into it. And that one was a little bit tricky because sometimes it would blaze over, like, the personal stuff, or the tangents that we would have gone on. So you'd have to say, no, keep tangents in. But once we got it down, it did a really good job, and honestly, quite a bit better than a human could do at figuring out where the major topics are inside of this.

10. AI-Generated Tweets and Embeddings

This is entirely AI generated off of my voice. We can create tweets for people who want to promote the podcast but struggle with descriptions. The AI can figure out the speaker's time and even identify guests' names. It can also recognize website references and find the associated URLs. Embeddings is another tool that allows us to ask questions about specific topics discussed in the podcast episodes. The AI can provide a summary of the topics based on show numbers. The AI doesn't have prior knowledge of the transcripts but converts each utterance into an embedding.

So this is entirely AI generated off of my voice. Tweets. All right, let's try to figure out what the tweets are. Sometimes we have people who want to tweet out about the podcast, but they don't necessarily know how to describe it, or come up with something good. So it's actually pretty good that you can create five for us to tweet out, as well as five for the actual listeners.

Speaker time. So we could write a reduce to figure it out, but you can also just ask the AI, all right, well, you know about the entire transcript. You know where each utterance starts, so add them up. And if there's guests on there, it will try to... It's actually really good at figuring out guests' names based on, we're like, hey, today we have Josh on, and it will figure out what the person's name is.
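For comparison, the "write a reduce" alternative mentioned here is short. The `TimedUtterance` shape and the sample values are assumptions; times are treated as seconds for the sake of the example.

```typescript
// Assumed utterance shape: who spoke, and when the utterance started and ended.
interface TimedUtterance {
  speaker: string;
  start: number;
  end: number;
}

// Sum the duration of every utterance per speaker.
function speakerTime(utterances: TimedUtterance[]): Record<string, number> {
  return utterances.reduce<Record<string, number>>((totals, utterance) => {
    const soFar = totals[utterance.speaker] ?? 0;
    totals[utterance.speaker] = soFar + (utterance.end - utterance.start);
    return totals;
  }, {});
}

console.log(
  speakerTime([
    { speaker: "Wes", start: 0, end: 10 },
    { speaker: "Josh", start: 10, end: 15 },
    { speaker: "Wes", start: 15, end: 30 },
  ]),
);
```

The deterministic version is cheap and exact, so asking the model mainly pays off for the fuzzier part, such as working out a guest's name from the conversation.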

Websites referenced. This is amazing: any time we reference a website, not saying www.whatever, but any time you just say, oh yeah, check out the docs, or check out the site, it will pick up that we're talking about a specific website and go out and try to find the URLs. It's not perfect, but it's probably 93.8% perfect.

Embeddings. This is a cool one, because a lot of people just have experience with the chat and GitHub Copilot and that's about it. So the third one is embeddings. This is not something that you're gonna be using via those tools, at least not yet. So this is another tool that I came up with, where it was kind of like, ask questions about Syntax. Wes, what did you talk about? What was the tool that you used to get the rest of the Nutella out of the jar, right? There's all these weird things that we talk about on the podcast, and it's sometimes hard to figure out which of the 600 episodes that was on. So we would just ask it questions. In this case, I just said DIY tools, 'cause I was trying to see if it could know the difference between JavaScript tooling and home tools. And it did a really good job at it. So it said, here's the highlights. And you tell it, please reference the show numbers where you actually found it. And it can do a really good summary of the topics that we have talked about. So the question is, how does it know about the 600-plus hours of transcripts that we have? And the reality is, it doesn't. We're not training some sort of special input model. What we do is we take every single utterance that we've said and we convert it into an embedding. So here's an example. I just said, I went on Twitter.

11. Analyzing Embeddings and Similarity

I asked people what they did today and received responses about lots of meetings. By converting these tweets into embeddings, we can determine their similarity. The AI understands the context and generates embeddings based on it.

I said, tell me what you did today. And people replied. And two people said, lots of meetings, and meetings, meeting, meetings. I took those two tweets, converted them into embeddings. That's what those numbers are. They're vectors. And you can figure out how similar are these two things? Same thing with this one. This is 82% similar. But if you look at the words, there's really only the word component and maybe menu that overlap. It's not text overlap. It's the AI understands what the person is talking about, and then will generate an embedding based off of that.
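The talk does not say which similarity measure produced the 82% figure. Cosine similarity is a common choice for comparing embedding vectors, so the sketch below assumes it. The `Embedded` shape, the `mostSimilar` helper, and the two-dimensional toy vectors are illustrative only; real embeddings have hundreds or thousands of dimensions.

```typescript
// Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical stored record: an utterance, the show it came from, and its embedding.
interface Embedded {
  text: string;
  show: number;
  vector: number[];
}

// Rank stored utterances by similarity to a query embedding, best match first.
function mostSimilar(query: number[], items: Embedded[], limit = 3): Embedded[] {
  return [...items]
    .sort((l, r) => cosineSimilarity(query, r.vector) - cosineSimilarity(query, l.vector))
    .slice(0, limit);
}
```

Under this approach a question is embedded the same way as the utterances, the closest utterances are looked up with something like `mostSimilar`, and only those are passed to the model along with their show numbers. That is how the tool can answer without the model having been trained on the transcripts.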
