1. Introduction to Performance Measurement Tooling

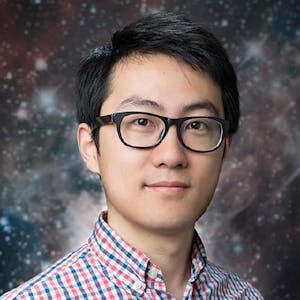

Hello, everyone. My name is Filip Rakowski, the CEO and co-founder of Vue Storefront. Today, I will be talking about performance measurement tooling and how it has evolved over the years. In the past, optimizing performance was challenging, and only a small group of developers knew how to measure and optimize it. However, with the introduction of Google Lighthouse in 2018, performance measurement became more accessible. The rise of progressive web apps (PWAs) also emphasized the importance of speed in mobile browsing. Now, performance is widely recognized as a crucial aspect of web development.

Hello, everyone. My name is Filip Rakowski. I'm the CEO and co-founder of Vue Storefront. If you don't know what Vue Storefront is, let me quickly explain it. Vue Storefront is an open source tool that lets you build amazing storefronts with basically any e-commerce stack. So no matter what problems you encounter while building e-commerce storefronts, we probably have a tool for that. Orchestration, caching, design systems: we have it all.

You can learn more about the project, or give us a star, because we are open source, by checking our repository on GitHub. You may also know me from a well-known series on Vue School about optimizing Vue.js performance. And today I will actually be speaking about that topic; to be more precise, I will be talking about performance measurement tooling. There are a lot of misconceptions around this topic, because the way performance is measured these days is definitely not straightforward to understand. Yet people use these tools, and without knowing exactly how they work, it's very easy to draw false conclusions from the output. So let's explore this topic in depth.

Believe it or not, just four or five years ago, optimizing the performance of web applications was something very exotic. Only a small group of developers really knew how to measure it, and only the chosen ones knew how to optimize it. Even though we already had some great tools to measure performance, most of us had no idea they existed. For the majority of us, optimizing performance usually meant following a bunch of best practices, maybe measuring the load event, but that was generally it. Only really big players and governments were taking proper care of it. I think the reason was simple: it was just too hard for the majority of developers to measure performance effectively, and there were no tools that gave simple-to-understand information.

Also, knowledge about the business impact of good or bad performance was not so common in the industry or among business owners. Everything changed in 2018 when Google introduced Google Lighthouse. It was the first-ever performance measurement tool that aimed to simplify something that until recently was complex and very hard to understand. At the same time, it started becoming obvious to everyone that the preferred way of consuming the web for the majority of users is through mobile devices like tablets or smartphones, mostly the latter. This accelerated the popularity of the term progressive web app, which was first introduced, I think, in 2015. The goal of PWAs was to bring a better mobile browsing experience to all users, regardless of their network connectivity or device capabilities. And one of the key characteristics promoted by PWAs was speed. Of course, all of that was followed by very aggressive evangelization by Google at events all around the world, on YouTube, and so on. But this is a good thing, because performance is super important. We were blind, and now we see everything.

2. Google Lighthouse and Metric Scores

Let's dive into Google Lighthouse, a performance measurement tool that provides detailed information on how well a webpage is performing. It assigns a score between 1 and 100, with scores below 50 considered bad and scores above 90 considered good. Each metric has a weight that reflects its impact on the overall user experience. Google uses real-world data from HTTP Archive to determine the range for good, medium, or bad scores.

So let's start today's talk with a very deep dive into Google Lighthouse. I am pretty sure that most of you have used this tool at least once in your professional career, and that's hardly surprising. The audit is available through browser extensions and external pages, and it can also be run directly from the Chrome DevTools. Just click on the Audit tab, run the test on any website, and voila, it will give you very detailed information on how well your page is performing against certain metrics that aim to describe the real experience of your users as accurately as possible.

So Lighthouse basically boils down the performance of a website to a single number between one and one hundred. It could also be treated as a percentage, where a score below 50 is treated as bad and a score above 90 is treated as good. I will come back to this, because it could be surprising that only the top 10% is treated as good. But, okay, going back to the topic. Where do these magical numbers come from? It turns out that each metric has its own weight that corresponds to its impact on the overall experience of the end user. And as you can see here, if we improve Total Blocking Time, Largest Contentful Paint, or Cumulative Layout Shift, we will improve our Lighthouse score much faster than by optimizing other metrics. Of course, it is important to focus on all of them, as each describes a different piece that sums up to the overall experience of your users, but there are some metrics that Google identifies as more important than others, in the sense that their impact on the perceived user experience is bigger. Of course, everything is subjective, but this is what the data says, so we have to believe it.
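To make the weighting idea concrete, here is a minimal sketch of a weighted average over per-metric scores. The weights below are an assumption modeled on those published for Lighthouse 8; they are not taken from the slide, they change between Lighthouse versions, and the function name `overall_score` is hypothetical.

```python
from typing import Mapping

# Illustrative weights in percent (an assumption, modeled on Lighthouse 8).
# Check the documentation of your Lighthouse version for the real values.
WEIGHTS = {
    "first-contentful-paint": 10,
    "speed-index": 10,
    "largest-contentful-paint": 25,
    "interactive": 10,
    "total-blocking-time": 30,
    "cumulative-layout-shift": 15,
}


def overall_score(metric_scores: Mapping[str, float]) -> int:
    """Combine per-metric scores (0.0 to 1.0) into a weighted 0-100 score."""
    total_weight = sum(WEIGHTS.values())
    weighted = sum(WEIGHTS[name] * metric_scores[name] for name in WEIGHTS)
    return round(100 * weighted / total_weight)


# Every metric at 0.5, then fix only one metric at a time.
baseline = {name: 0.5 for name in WEIGHTS}
better_tbt = {**baseline, "total-blocking-time": 1.0}
better_fcp = {**baseline, "first-contentful-paint": 1.0}
print(overall_score(baseline), overall_score(better_tbt), overall_score(better_fcp))
```

With these assumed weights, fixing Total Blocking Time moves the overall score three times as far as fixing First Contentful Paint, which is the effect described above.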

OK, so we know how the Google Lighthouse score is calculated overall, but what we don't know is how the score of individual metrics is calculated. What do I mean by this? Let's say we have Total Blocking Time. How do we know whether one second is a good or a bad result? Where does that come from? Google uses real-world data collected by HTTP Archive. If you don't know what HTTP Archive is, I really encourage you to check it out, because it's just awesome. It contains a lot of useful information about the web in general and how it's being used from both real users and problems. It can help you understand what is important and how your website performs against others in your field or in different fields.

And I am warning you, some of the information you'll find there could be really depressing, or at least surprising. My favorite example: you can learn that it's 2021 and the average amount of compressed, I repeat, compressed JavaScript shipped by a statistical website is almost 450 kilobytes on mobile. 450 kilobytes of compressed data on a mobile device. That's a few megabytes of uncompressed JavaScript. On mid-range or low-end devices, it will result in a really, really bad experience, and the loading time will allow you to make a coffee, come back, and still wait until the website is ready. There are a lot of examples where a page takes 13, 14, even 15 seconds to load on a mobile device. Would you wait that long? I don't think so.

OK, going back to the main topic. Based on the data for each metric from HTTP Archive, Google sets the ranges for a good, medium, or bad score.

3. Lighthouse Score and Metric Changes

Remember the Lighthouse score? Scoring in the bottom 90% of the range is treated as a bad or medium score, because roughly 90% of websites are bad or medium. Both metrics and their weights in Lighthouse change over time as Google learns new things. With each version, the score more accurately measures the real-world impact of different metrics on user experience. Comparing overall scores over time is misleading, as the algorithm may change. Instead, focus on comparing individual metrics and consider the Core Web Vitals as vital measurements for website performance.

Remember the Lighthouse score? For each metric, too, the top 10% is perceived as a good result and the bottom 50% is perceived as bad, with everything in between being a medium result. You could say that classifying only the top 10% of all websites as good is not enough. But if you think a little, and especially think about the previous slide, you will quickly realize that the majority of websites are just extremely slow. Hence, scoring in the bottom 90% of the range is treated as a bad or medium score, because roughly 90% of websites simply are bad or medium.

And your score in Lighthouse for each individual metric is determined by your position on the chart. If you're at the top, you're getting 100%; if you're at the bottom, you're getting 0. Simple.
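As a rough sketch of how a position on such a chart can be turned into a score, the function below uses a log-normal curve pinned at two control points, so that the median value scores 0.5 and the 10th-percentile value scores 0.9. Lighthouse's documentation describes its scoring curve in similar terms, but this is a simplified illustration rather than Lighthouse's actual code, and the control points in the usage lines (2500 ms and 4000 ms) are placeholder assumptions.

```python
import math
from statistics import NormalDist


def metric_score(value: float, p10: float, median: float) -> float:
    """Map a raw metric value (lower is better) to a score from 0.0 to 1.0.

    The log-normal curve is pinned so that `median` scores 0.5
    and `p10` scores 0.9.
    """
    if value <= 0:
        return 1.0
    z_90 = NormalDist().inv_cdf(0.9)  # standard normal quantile, about 1.2816
    sigma = (math.log(median) - math.log(p10)) / z_90
    z = (math.log(value) - math.log(median)) / sigma
    return 1.0 - NormalDist().cdf(z)


# Hypothetical control points for a load-time metric, in milliseconds.
for value_ms in (1500, 2500, 4000, 8000):
    print(value_ms, round(metric_score(value_ms, p10=2500, median=4000), 2))
```

The closer a value sits to the fast end of the distribution, the closer its score gets to 1.0, and slow values fall toward 0.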

There is one important thing that everyone using Lighthouse needs to be aware of, though. Both the metrics and their weights change over time. They are not persistent. Why? Well, over time Google learns new things about the impact of certain metrics and behaviors on user experience, and they periodically update the Lighthouse scoring algorithm to be more accurate. With each version, the score more accurately measures and describes the real-world impact of different metrics on user experience. It's really not easy to come up with good metrics and weights, because we can measure performance from many different perspectives, and figuring out how each of them influences the overall experience is just super hard. This is why we have Lighthouse 7, and we'll probably have Lighthouse 17 as well.

So here's an example of the changes between Lighthouse 5 and Lighthouse 6. As you can see, not only did the weights change, but two metrics from Lighthouse 5 were also replaced in Lighthouse 6. Since Lighthouse 7 it seems that we have established a good set of metrics to measure overall performance, but the weights are still being adjusted.

It's important to keep in mind that the score is changing. For example, say you benchmark your website today and save your performance score, let's say it was 60, and then you benchmark it a year from now and it is 70. Does it mean it got better? Does it mean it got worse? It doesn't mean anything, because the algorithm could have changed during that time. The score could improve or decrease because the algorithm has changed, not because you improved something or failed on something else. This is why this kind of comparison only makes sense when you are comparing the individual metrics. I really encourage you to do that and to save them. I will also tell you a bit about a tool that automates this.

Never, never compare the overall score, because it's super misleading. Over time, Google also identified that, of all the metrics we know, there are three that form a minimal set of measurements vital for website performance. They call them Core Web Vitals, which is pretty accurate naming. Google describes them as quality signals, and I think that's the perfect description, because there is no metric that will tell you whether a website is delivering a good or bad experience. This is not something that you can just measure. We should never follow them blindly; this is just a signal.
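The advice to compare individual metrics rather than the overall score could look like the sketch below. It assumes you saved the raw metric values from two audits yourself; the metric names and numbers are made up for illustration.

```python
def metric_deltas(before: dict, after: dict) -> dict:
    """Return after - before for every metric present in both reports."""
    return {name: after[name] - before[name] for name in before if name in after}


# Hypothetical raw values saved from two audits a year apart (lower is better).
last_year = {"lcp_ms": 4200, "tbt_ms": 600, "fcp_ms": 1900}
today = {"lcp_ms": 3100, "tbt_ms": 750, "fcp_ms": 1900}

for name, delta in metric_deltas(last_year, today).items():
    trend = "improved" if delta < 0 else "regressed" if delta > 0 else "unchanged"
    print(f"{name}: {delta:+d} ms ({trend})")
```

Raw values like these stay comparable even when the scoring algorithm changes, which is why they are safer to track than the single score.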

4. Introduction to Core Web Vitals

Each metric and testing tool provides a signal about the quality of a website. The first Core Web Vital is Largest Contentful Paint (LCP), which measures how long it takes for the largest content to appear on the screen. LCP is crucial for loading performance, and a good LCP is below two and a half seconds.

And each metric and testing tool is always giving you just a signal about the quality of a website, nothing else. So coming back to this, we have three Core Web Vitals. The first one is called Largest Contentful Paint, which basically measures how long the website takes to show the largest piece of content on the screen above the fold. It could be an image, a link, or a text field; that doesn't matter. This metric describes loading performance because it tells you how long the user needs to wait until the biggest part of the UI shows up. If this definition is not telling you anything, let's see it in an example. On the first image we see that the LCP element is the image in the middle, and it appears in the fifth frame. Assuming each frame is one second, our LCP is five seconds. Understandable? On the image below, the LCP element is this Instagram image, and our LCP is three seconds. A good LCP is below two and a half seconds, and a bad one is above four seconds. So we can quickly conclude that there was no good LCP in the previous examples.

5. Performance Metrics and Core Web Vitals

First Input Delay measures the time users need to wait for the first response to their action. A good value is below 100 milliseconds, as after 100 milliseconds, users may perceive a delay. Cumulative Layout Shift measures visual stability, with a good score meaning that less than 10% of the layout shifts. Core Web Vitals are a minimum set of quality signals for perceived performance. The Lighthouse score has limitations, such as not distinguishing between single-page and multi-page apps, and not providing insights on heavy loads or client-caching performance.

Another metric, one that measures the responsiveness of the website (so we had loading, now responsiveness), is First Input Delay. In short, it's the time that users need to wait until they see the first response to their action. I'm sure you have seen that many times. You enter a website and click on a link that appears in the viewport, but the website is still loading, so you have to wait a little until the navigation to another page really happens, or some modal window opens, or some other thing happens. This is exactly the First Input Delay.

It happens only if you have long tasks that are occupying the main thread for more than 100 milliseconds. If you don't know what the main thread is, well, start by reading about how the event loop works. It's really useful. Okay, coming back. If the user clicks on something, they need to wait for that task to finish, and only then does the action happen. A good First Input Delay value is below 100 milliseconds. This is because, according to studies, 100 milliseconds is the threshold for a perceived instant response. After 100 milliseconds, the user will notice that the action was delayed, and everything below 100 milliseconds is treated as instant. It's pretty useful information.

And last but not least, Cumulative Layout Shift measures visual stability. It basically tells you whether there are any changes in the layout during the loading process, for example if an image or some text has moved. CLS usually happens when you don't have placeholders for the parts of the UI that load dynamically, like images in the image stack, for example. Here we see an example of a layout shift. The Click Me button has appeared, and because of that the whole green box had to shift. A good score for CLS is when less than 10% of your layout shifts during the loading process, and a bad one is when more than 25% shifts. And intuitively, I think that's right. So the three Core Web Vitals are a minimum set of quality signals for good perceived performance, measuring loading performance, page interactivity, and visual stability.
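The thresholds mentioned for the three Core Web Vitals can be collected into a small lookup, sketched below. The LCP and CLS boundaries and the 100 ms "good" boundary for First Input Delay come from the talk; the 300 ms "bad" boundary for First Input Delay is not stated in the talk and is an assumption based on Google's published guidance. The metric keys and the `classify` helper are hypothetical names.

```python
# (good_upper_bound, bad_lower_bound) per metric.
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),    # Largest Contentful Paint, seconds (from the talk)
    "fid_ms": (100, 300),   # First Input Delay, ms (300 is an assumption)
    "cls": (0.10, 0.25),    # Cumulative Layout Shift score (from the talk)
}


def classify(metric: str, value: float) -> str:
    """Label a measurement as 'good', 'medium', or 'bad'."""
    good, bad = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= bad:
        return "medium"
    return "bad"


# The two LCP examples from the slides: five seconds and three seconds.
print(classify("lcp_s", 5.0), classify("lcp_s", 3.0))
```

Both example pages miss the "good" range, matching the conclusion drawn from the LCP slides.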

And I think it's already a huge step forward since Lighthouse 1, where actually every page was scoring 100, there were a lot of weird metrics, and we had a very naive understanding of what matters most for a good user experience. But there is still room for improvement, and I want you to be aware of that too. For example, a Lighthouse audit will not show any difference between a single-page application and a multi-page application, even though the former has much faster in-app navigation. So even if both have the same score, the perceived experience of your users will always be better on the single-page app, because the in-app navigation is much faster. Also, the Lighthouse score will not tell you anything about how well or how badly your website performs under heavy load, or how good your client-side caching is, that is, how well it handles subsequent visits. It will only tell you if it was cached or not. But that's all we have right now, and I think it's definitely better than nothing, and it is pretty accurate, don't get me wrong.

6. Running Lighthouse Audit Locally

If you want to continuously measure performance for each release or with every pull request, I strongly suggest that you host the Lighthouse environment locally and use tools like Lighthouse CI, which is actually available as a GitHub action.

Just one problem. If you run a Lighthouse audit locally on different devices, you will almost always get different scores, because it's influenced by many factors, like your computing power, your network connectivity, and your browser extensions. Of course, you can apply throttling or browse in Incognito mode, but that will not eliminate the whole problem. If you want to continuously measure performance for each release or with every pull request, I strongly suggest that you host the Lighthouse environment locally and use tools like Lighthouse CI, which is actually available as a GitHub action. It's a great tool, and it will automatically run a Lighthouse audit on each pull request with just a few lines of code.
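One common way to tame run-to-run variance is to audit the same page several times and keep the median of each metric. The sketch below only illustrates that idea; it is not Lighthouse CI configuration, and the metric names and values are invented.

```python
from statistics import median


def median_metrics(runs: list) -> dict:
    """Return the per-metric median across several audit runs."""
    if not runs:
        raise ValueError("at least one run is required")
    return {name: median(run[name] for run in runs) for name in runs[0]}


# Three hypothetical runs of the same page on the same machine.
runs = [
    {"lcp_ms": 3100, "tbt_ms": 420},
    {"lcp_ms": 2800, "tbt_ms": 950},  # one noisy run with a long task
    {"lcp_ms": 3000, "tbt_ms": 400},
]
print(median_metrics(runs))
```

The median ignores the single noisy run, so one outlier does not distort the reported value.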

7. Lighthouse CI and LabData vs FieldData

If you run Lighthouse CI, it will give you a detailed view of the score and how it changed over time. LabData provides debugging information, while FieldData reveals real-world bottlenecks. The Chrome User Experience Report collects performance metrics from Chrome users and is available if your website is crawlable.

If you run Lighthouse CI, it will give you a detailed view of the score. For example, how it changed over time from the previous version, how the metrics have changed. You will see immediately if a certain version of the pull request contributes to better or worse performance. That's the most important thing. This is the main reason why it would be worth introducing Lighthouse CI into your workflows.

OK, but the solution is not perfect, because the fact that your website runs great in your controlled environment only means that it runs great in your controlled environment. If you're testing on high-end iPhones or MacBooks, you can usually expect good results. But not all of your users will have high-end devices and good Internet access. So there are basically two types of data. One is called LabData, and this is what Lighthouse gives you and what we have been discussing until now. It's data that always comes from a controlled environment, like your computer. But there's also another type of data called FieldData that, as the name suggests, comes from the wild. The image suggests that too. It's provided by real-world users. And you actually need both. LabData is mostly useful for debugging, and as we saw previously, it lets us see an improvement or regression in certain metrics after each release or each pull request. We can also very easily reproduce those results. The problem is that it gives us no information about the real-world bottlenecks of our website. And this is where FieldData shines.

To illustrate why we need both Lab and FieldData, I want to show you two real-world examples. Even though the Lighthouse score here at the top is terrible, something like 80-90% of users are experiencing great performance. The bars that we see in the middle are the FieldData. Another example is the exact opposite. Even though we have a pretty decent Lighthouse score at the top (73 for an eCommerce website is very decent), the majority of users are experiencing bad or mediocre performance. So really this is just an indicator, and LabData is not enough information to determine whether our website is doing well or badly. So where can we find the FieldData then? Google launched something called the Chrome User Experience Report, and it's exactly what the name suggests. We must admit they're good at naming: Core Web Vitals, Chrome User Experience Report, all that stuff. It's a report of real-world user experience among Chrome users. Chrome collects four performance metrics each time a user visits a website and sends them to the CrUX database. By the way, you can disable it in Chrome if you want, but by default it is turned on. And to have this data available for your page or website, it needs to be crawlable, so keep that in mind.

8. PageSpeed Insights and Lab Data

And this data from Chrome User Experience Report is available in PageSpeed Insights, Google BigQuery, and Chrome User Experience Dashboard in Data Studio. PageSpeed Insights is a performance measurement tool that runs the Lighthouse Performance Audit on your website but also shows you the field data collected from the Chrome User Experience Report. The lab data is just an indicator, and the data from the PageSpeed Insights Lighthouse audit comes from a Google data center. The field data shows how well your website performs against the Core Web Vitals and First Contentful Paint.

And this data from Chrome User Experience Report is available in PageSpeed Insights, Google BigQuery, and Chrome User Experience Dashboard in Data Studio. And I will not talk about the latter one, but I just strongly, strongly encourage you to check it out, because Chrome User Experience Dashboard in Data Studio is amazing. It is allowing you to create very detailed reports and you can specify what metrics you would like to see, how they changed over time, which devices for what types of users. It's super, super useful.

And today we will talk about the former, which is PageSpeed Insights. PageSpeed Insights is a performance measurement tool that runs the Lighthouse Performance Audit on your website but also shows you the field data collected from the Chrome User Experience Report. So PageSpeed Insights combines the two ways of getting data that we have seen. It uses both Lighthouse and the Chrome User Experience Report. It's essentially an audit that gives us all the information we need.

Lab data is what the Lighthouse score is calculated from. This is why you shouldn't focus that much on improving this part. It's just an indicator. But you could ask: what's the difference between the lab data from PageSpeed Insights and the lab data that I get by running Lighthouse on my own device? The data from the PageSpeed Insights Lighthouse audit comes from a Google data center. They run the audit in one of the Google data centers instead of on your computer. Usually it's the data center closest to your location. But sometimes (in my experience, much more often than sometimes) another one is used, if the closest one is under heavy load. This is why you could get completely different results on the same page if you do one run after another. And that's another reason not to put a lot of focus on the lab data. Let's treat it as an indicator.

To add a little more detail, all the tests run in this data center, but also on an emulated Motorola Moto G4, which is a typical mid-range mobile device. So unless all your users are using this device, this score is not going to be a realistic simulation.

What really matters is field data, and it's collected from the last 28 days in this particular example. But I mean, in every PageSpeed Insights audit, it's collected from 28 days. So if you ship an update, you will need to wait a whole month to actually see whether there was an improvement or not. The field data shows how well your website performs against the Core Web Vitals and First Contentful Paint. The percentage of each color shows how many users experience good, medium, or bad results. So, for example, if we see a whole green bar, that means that every user is experiencing good results.
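The colored bars can be thought of as the share of page loads falling into each range. Here is a minimal sketch of that bucketing, using the LCP boundaries mentioned earlier in the talk (2.5 and 4 seconds); the sample values are invented, and the real report is built from Chrome User Experience Report data rather than a plain list.

```python
def distribution(samples: list, good: float, bad: float) -> dict:
    """Return the percentage of samples that are good, medium, and bad."""
    if not samples:
        raise ValueError("no samples to bucket")
    counts = {"good": 0, "medium": 0, "bad": 0}
    for value in samples:
        if value <= good:
            counts["good"] += 1
        elif value <= bad:
            counts["medium"] += 1
        else:
            counts["bad"] += 1
    return {label: 100 * n / len(samples) for label, n in counts.items()}


# Hypothetical LCP values in seconds from five real page loads.
lcp_samples = [1.8, 2.1, 2.4, 3.0, 5.2]
print(distribution(lcp_samples, good=2.5, bad=4.0))
```

A fully green bar corresponds to 100% of the samples landing in the "good" bucket.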

9. Impact of Core Web Vitals on SEO

When using PageSpeed Insights, always prioritize field data over lab data. Performance has an impact on SEO, especially for mobile search results. Desktop devices may be included in the future. Only field data matters for SEO optimization. Google looks at each metric separately, and a good 75th percentile results in an SEO boost. In this example, an SEO boost is achieved for First Input Delay, LCP, and CLS.

Perfect scenario. So to summarize, when you are using PageSpeed Insights, always look at the field data when looking for meaningful real-world information. You can use lab data to check whether there was an improvement immediately after you made your changes. But the most important part here is field data, period.

Now something we've all been waiting for, and something that a lot of people struggle with. I myself had a lot of trouble finding the right information, because it was changing over time. So what is the impact of Core Web Vitals, and performance in general, on SEO? It's a new thing that was introduced this year, and the short answer is yes, performance affects SEO. But first of all, right now it influences only the mobile search results and takes into account only data collected from mobile devices. So the mobile field data is what you should look at. Most likely it will be a thing for desktop devices as well at some point, but not yet. Also, only the field data matters, so this is what you should optimize; the lab data doesn't have any influence on SEO. So if you want an SEO boost, check your field data in PageSpeed Insights and optimize that.

Let's see a real-world example and how it works in depth. Google looks at each of the four collected metrics separately, and if the 75th percentile of a given metric is good, then you're getting an SEO boost from this particular metric. If not, you're not getting an SEO boost. That's it. So in this example we will have an SEO boost from First Input Delay, LCP, and CLS, and we will not have it from FCP. And that's all I have for you today. I hope this talk helped some of you to better understand this wild world of performance measurement tooling, and I really encourage you to dig a little deeper yourself, because it's a super fascinating topic. Enjoy the rest of the event.
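The 75th-percentile rule described in this section can be sketched as follows. The nearest-rank percentile method is an assumption (the talk does not say how the percentile is computed), the helper names are hypothetical, and the sample values are invented.

```python
import math


def percentile_75(samples: list) -> float:
    """Nearest-rank 75th percentile of a non-empty list of measurements."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def is_good_at_p75(samples: list, good_threshold: float) -> bool:
    """True when the 75th percentile falls within the 'good' range."""
    return percentile_75(samples) <= good_threshold


# Hypothetical mobile LCP field samples in seconds; 2.5 s is the 'good' limit.
lcp_field = [1.2, 1.9, 2.0, 2.3, 2.4, 2.6, 3.8, 5.0]
print(percentile_75(lcp_field), is_good_at_p75(lcp_field, 2.5))
```

Each metric is checked on its own, so a page can pass on some metrics while failing on others, as in the example from the talk.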
