In this talk, we will discuss how to leverage machine learning practices in software testing, with several practical examples and a case study from my own project, where I used machine learning for bug triage. Let's embrace the future together!

The Future is Today: Leveraging AI in Software Testing

Video Summary and Transcription

This Talk discusses integrating machine learning into software testing, exploring its use in different stages of the testing lifecycle. It highlights the importance of training data and hidden patterns in machine learning. The Talk also covers generating relevant code for test automation using machine learning, as well as the observation and outlier detection capabilities of machine learning algorithms. It emphasizes the use of machine learning in maintenance, bug management, and classifying bugs based on severity levels. The Talk concludes with the results of classification and bug management, including the use of clustering.

1. Integrating Machine Learning into Software Testing

In this session, I want to talk about integrating machine learning into our daily software testing activities. We will discuss integrating machine learning activities in different software testing stages. We will start from the first stage in the lifecycle, analyze the requirements, design test cases, implement test code, and discuss maintenance activities. We need to use machine learning to improve our activities due to the complexity of the systems we test, the need to cover different interfaces and integrations, and the time constraints and resource issues we face. Machines and robots can help us, as they already do in our daily lives.

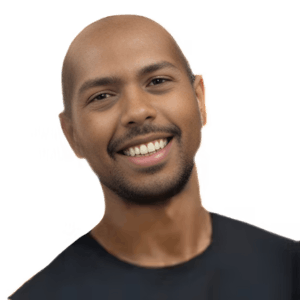

Hello, everybody! My name is Mesut Turkal. I'm a software quality assurance engineer, and in this session, I want to talk about integrating machine learning into our daily software testing activities.

Machine learning is a very hot topic nowadays. Everyone is talking about it, and everyone is trying to take advantage of it to improve their efficiency. So, how about quality assurance activities, right? We can also improve our efficiency, maybe reduce some manual tasks, and take advantage of machine learning at different stages of our work.

So, this is what we will do. We will discuss leveraging machine learning in different software testing stages. We will start from the first stage in the lifecycle, analyzing the requirements and designing test cases. Then we will discuss how to implement test code, because test automation is very important, and finally we will discuss some maintenance activities supported by machine learning.

First of all, let's look at the background: why do we need to improve our activities with the help of machine learning? The second part of the presentation is probably the most important one: we will go through all the stages of the software testing lifecycle. In the last part, I will share a practical example where I used machine learning in my personal project, explain what I did, and share some results. So let's get started with the first part: the introduction, and the background of why we need machine learning in software testing.

We need machine learning to support our activities because we face several challenges. Software testing is not easy anymore. The applications and systems we test are very complex. They have many interfaces and interactions, and they communicate with other applications on different platforms, right? So we have to cover different interfaces and integrations, which means the scope to test is comprehensive. And of course we have time constraints. Time is precious. If our test cases slow down the pipelines, sooner or later that won't be acceptable, because developers and product managers will start complaining about slow-running test cases. We want to find and fix our failures as soon as possible, because fast delivery is one of the quality dimensions in the overall quality context.

But to support fast delivery, we have to keep up. We have to adapt our solutions quickly and cover a lot of scope and integrations quickly. So we have time constraints, scope constraints, and resource issues, including cost and budget. We face challenges from many different dimensions, and it looks difficult to cope with all of them. But maybe we can get some help from someone. Could machines or robots be that someone? Actually, they can, because in our daily lives we already see them helping us in many situations. When we browse social media or read articles, we see them recommending content to us.

2. Machine Learning in Software Testing

In this part, we discuss how machine learning works and its working principle in software testing. We explore the use of machine learning tools, such as natural language processing algorithms, in software testing practices. We also highlight the importance of training data and how hidden patterns are revealed to generate a model for predicting future reactions or results. Additionally, we draw parallels between machine learning and biological learning, emphasizing the need for learning and observation in testing. Finally, we examine the software testing life cycle and the stages involved, from analyzing requirements to executing test cases and performing maintenance.

For example, when I read one article, I see suggestions for similar articles I might be interested in, and most of the time they are very accurate, right? How does it work? How do they know me and what kind of topics I might be interested in? Because they watch me and observe me. They already know which articles I visited and which topics I was interested in before, and based on that they predict which similar topics I could be interested in. So there is a pattern underlying my actions, and once this hidden pattern is revealed through observation, which is called learning in machine learning, future actions can be predicted easily. This is the working principle of machine learning, right?

It can work similarly in our software testing activities. For example, if I want to find out which tools use machine learning to perform software testing, I can run even this query with the help of machine learning. Nowadays, as we all know, there are several natural language processing (NLP) tools and algorithms we can communicate with, and when we send our queries we get accurate answers. But beyond NLP, there are many other algorithms we can use in our software testing practices, and we will see how fast and reliable they are.

Let's quickly recall how machine learning algorithms work. The training data is very important for accurate predictions: if the model does not learn well from the data, the results it generates might not be accurate or match the expected results. With enough accurate and consistent data, observing that data reveals the hidden patterns, and a model is generated. Whenever new input data arrives, the model predicts how the system will react to it, and this prediction is our result. Once the model is complete, we evaluate its performance. If we are satisfied with it, we can deploy it to production; otherwise, we keep improving it by fine-tuning, that is, by adjusting the options or parameters of the model.

This looks very similar to biological learning, as in the examples I gave on the previous slides about how we learn things. First we observe and try to learn how the whole system works, and then for upcoming situations we try to predict the related reactions or results. For example, as a human tester, if you ask me to test your system, my answer would most probably be: okay, if I have the bandwidth, of course I can test your system, but first please let me learn it. Even if you don't teach me yourself, I will learn it through exploratory activities or by going through the documents and materials. I will observe, click the buttons, navigate to different pages, and see how the system reacts to my actions. After I complete my learning, I can guess what the results will be. For example, if I see that the APIs work in a secure way, I can predict that a request with unauthenticated tokens or credentials will get an error response, most likely 401 Unauthorized (some APIs answer 403 Forbidden). That is my prediction, because I have already learned and observed that the system works in a secure way. Machines work in the same way.

Now let's see how this works in the stages of our software testing life cycle. This is a very usual life cycle. Of course, nowadays we don't follow it in a waterfall manner anymore; we work iteratively, in an agile way. But basically this is how it works, even within the iterations. We start by analyzing the requirements and understanding the features, and then we design test cases to cover those requirements or features. Once the test cases are designed, we have to execute them, either manually or automatically. If we are doing automated testing, we have to implement the test code, which is part of the environment setup stage. Test case execution comes after environment setup, and finally, after the execution, we close our testing activity with maintenance.
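The tester's prediction about a secured API can be written down as an automated check. The following is a minimal sketch, not taken from the talk: it starts a toy API locally with Python's standard library (the handler, the `/books` path, and the `secret-token` value are all hypothetical) and checks that requests without valid credentials are rejected. It expects 401 Unauthorized, the status HTTP defines for missing or invalid credentials; if the API under test answers 403 instead, the expectation would need adjusting.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

VALID_TOKEN = "secret-token"  # hypothetical credential, for illustration only


class SecureBooksHandler(BaseHTTPRequestHandler):
    """Toy API that only serves requests carrying the expected bearer token."""

    def do_GET(self):
        if self.headers.get("Authorization") != f"Bearer {VALID_TOKEN}":
            self.send_response(401)  # missing or wrong credentials
            self.send_header("WWW-Authenticate", "Bearer")
            self.end_headers()
            return
        body = b'[{"isbn": "0-306-40615-2"}]'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


# Opener without proxy handling, so local requests never go through a proxy.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def status_for(url, token=None):
    """Send a GET request and return the HTTP status code, including error codes."""
    request = urllib.request.Request(url)
    if token is not None:
        request.add_header("Authorization", f"Bearer {token}")
    try:
        with OPENER.open(request) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def run_security_check():
    """Start the toy API on a free port and return the statuses for three requests."""
    server = HTTPServer(("127.0.0.1", 0), SecureBooksHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/books"
    try:
        return (
            status_for(url),                 # no credentials
            status_for(url, "wrong-token"),  # invalid credentials
            status_for(url, VALID_TOKEN),    # valid credentials
        )
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    no_token, bad_token, good_token = run_security_check()
    assert no_token == 401 and bad_token == 401, "unauthenticated access was not rejected"
    assert good_token == 200
    print("unauthenticated requests are rejected as predicted")
```

Against a real system, only `status_for` and the expected codes would be reused; the local server exists just to keep the sketch self-contained.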

3. Leveraging Machine Learning in Test Case Lifecycle

In this part, we look at how machine learning can support each stage of the test case life cycle. At the end of a test case's life cycle, issues in the test case itself lead to improving the test code, while issues in the product lead to raising bugs or tickets. We start with analyzing the requirements and generating test cases. Using the ISBN number of a book as an example, we show how observing the format of existing values lets us generate new positive and negative test cases, and how NLP tools now offer a much more straightforward way to do the same.

If we have any issues in the test case itself, we can improve the test code. Otherwise, if we find issues with the product, we can raise bugs or tickets. This is the last stage of a single test case's life cycle.

So, we can leverage machine learning in each stage. Let's discuss the stages one by one, starting with analyzing the requirements and then generating test cases accordingly. Again, after observing, that is, after doing our learning or training, we can generate test cases.

Let me go straight to an example: an API that we will test, the API (Application Programming Interface) of a library. The library contains several books, and each book entity has different attributes, like the ISBN number, the price, or the publication year of the book. Whenever I send queries, I get the relevant responses, and from these responses I can see what kind of values represent these attributes.

An ISBN number is a combination of numbers in a specific format. First we have a digit, then a hyphen, then three more digits and another hyphen, and so on. This is one format designed to represent the ISBN number, and noticing it is already a form of training, of learning. After I observe this, I can generate other test cases by injecting different values: not this exact value, but similar values, and even intentionally wrong values. For example, what happens if I start with two digits? That violates the standard defined for the ISBN number, so it becomes a negative test case, one we generate intentionally by injecting unexpected values. All these generations become possible after learning, that is, after seeing the data and making our observations. But of course, nowadays there is a much more straightforward way to do this: using NLP tools or algorithms. We can just send our query.
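The observe-then-generate idea can be sketched in a few lines of Python. This is a hypothetical illustration, not the tool used in the talk: it assumes the observed ISBN values look like `0-306-40615-2` (one digit, three digits, five digits, and a check digit, separated by hyphens), checks the format only (not the ISBN check-digit rule), and derives new positive values plus intentionally wrong negative ones, such as a value starting with two digits.

```python
import random
import re

# Hypothetical "learned" format, inferred from an observed value such as "0-306-40615-2":
# one digit, three digits, five digits, one check digit, separated by hyphens.
# Only the format is checked; the ISBN check-digit rule is deliberately ignored.
LEARNED_PATTERN = re.compile(r"^\d-\d{3}-\d{5}-\d$")
GROUP_SIZES = (1, 3, 5, 1)


def random_digits(length: int, rng: random.Random) -> str:
    return "".join(rng.choice("0123456789") for _ in range(length))


def positive_cases(count: int, rng: random.Random) -> list[str]:
    """New values that follow the observed format, with random digits in each group."""
    return ["-".join(random_digits(size, rng) for size in GROUP_SIZES) for _ in range(count)]


def negative_cases(valid: str) -> list[str]:
    """Intentionally wrong values, each derived from a valid one by a single mutation."""
    first, *rest = valid.split("-")
    return [
        "-".join(["1" + first, *rest]),  # two digits in the first group
        valid.replace("-", "", 1),       # first hyphen missing
        "X" + valid[1:],                 # letter instead of a digit
        valid[:-2],                      # truncated value
        "",                              # empty input
    ]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the generated data is reproducible
    for value in positive_cases(3, rng):
        print("positive:", value, "matches:", bool(LEARNED_PATTERN.match(value)))
    for value in negative_cases("0-306-40615-2"):
        print("negative:", repr(value), "matches:", bool(LEARNED_PATTERN.match(value)))
```

In a real test suite, each positive value would be sent to the API expecting acceptance, and each negative value expecting a validation error.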

For example, in the example on this slide, I just explain my problem: I am a tester, I have to design some test cases, and my use scenario is that users go to this web page and perform queries by typing keywords into the text fields on the page. Please define some test cases for me. And I can see it generates six or seven test cases, covering positive scenarios, corner cases, and many other coverage points. After we design our test cases, the next stage is implementing the test code, which again can be done using NLP. In the example on this slide, I explain my problem as test steps.

4. Generating Relevant Code for Test Automation

In this part, we discuss generating relevant code for test automation using machine learning. We explore different programming languages, libraries, and frameworks, such as Python with Selenium, Cypress, and Playwright. The generated code is similar to what we would implement manually, so machine learning helps automate code generation and saves time and effort.

Step number one: go to this page, that is, navigate to this URL. Step number two: locate this element and perform the user interaction, like clicking the button or typing some keywords into the text field. And I can see the relevant code is generated. This one was, I guess, Python with Selenium and some other libraries. It's not limited to specific programming languages or libraries: I can also ask it to generate the code in a test automation framework. For example, here I'm asking it to generate code for a similar scenario, not the same one, in Cypress. But I can ask for other frameworks as well, like Playwright, Selenium, or any other. Again, I can see that it generates code very similar to what I would implement; if I had not asked, I would most probably have implemented very similar code myself.

5. Code Generation and Test Execution

Now, for code generation, I follow a different path: I start with a Postman query and convert it to different programming languages. When implementing test cases in UI automation, recognizing elements visually becomes important: instead of relying on traditional attributes, we can train a model on similar icons so that the code matches elements visually. After implementation, we execute the test case and collect metrics such as execution duration.

In the third code generation example, I follow a different path. I start with a Postman query and ask for it to be converted into different programming languages: not only Python, but also JavaScript and some others. So first I implement my Postman query, copy it as a curl command, and ask for the conversion. It's fully automated, right? I don't waste any time, and the result is fast, accurate, and reliable. The generated code is something I can directly inject into my test automation environment.
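The talk uses an NLP tool for this conversion. For simple requests, the same translation can also be done deterministically; the sketch below is a hypothetical helper (`curl_to_request` is not part of any library) that turns a curl command, as copied from Postman, into an unsent Python `urllib.request.Request`. It understands only the http(s) URL, `-X/--request`, `-H/--header`, and `-d/--data/--data-raw`, and skips everything else, so it is a starting point rather than a full curl parser.

```python
import shlex
import urllib.request


def curl_to_request(command: str) -> urllib.request.Request:
    """Translate a simple curl command into an (unsent) urllib Request.

    Supported: the http(s) URL, -X/--request, -H/--header, -d/--data/--data-raw.
    Anything else (for example Postman's --location) is skipped.
    """
    tokens = shlex.split(command)
    if not tokens or tokens[0] != "curl":
        raise ValueError("expected a command starting with 'curl'")

    url, method, data = None, None, None
    headers = {}
    i = 1
    while i < len(tokens):
        token = tokens[i]
        if token in ("-X", "--request") and i + 1 < len(tokens):
            method = tokens[i + 1]
            i += 2
        elif token in ("-H", "--header") and i + 1 < len(tokens):
            name, _, value = tokens[i + 1].partition(":")
            headers[name.strip()] = value.strip()
            i += 2
        elif token in ("-d", "--data", "--data-raw") and i + 1 < len(tokens):
            data = tokens[i + 1].encode("utf-8")
            i += 2
        else:
            if token.startswith(("http://", "https://")):
                url = token
            i += 1  # unsupported flags and other non-URL tokens are skipped

    if url is None:
        raise ValueError("no http(s) URL found in the curl command")
    # With data and no explicit method, urllib uses POST, as curl does for -d.
    return urllib.request.Request(url, data=data, headers=headers, method=method)


if __name__ == "__main__":
    copied_from_postman = (
        "curl --location 'https://example.com/books' "
        "--header 'Content-Type: application/json' "
        "--data '{\"isbn\": \"0-306-40615-2\"}'"
    )
    request = curl_to_request(copied_from_postman)
    print(request.get_method(), request.full_url, request.headers, request.data)
```

The returned request can then be sent with `urllib.request.urlopen` inside a test. Note that urllib normalizes header names, so `Content-Type` is stored as `Content-type`.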

Moving on to implementing test cases: in UI automation, visual recognition of elements is much more important, right? In UI automation terms, we have to locate the elements on the web pages; actually, the code has to locate them. Normally we do this with the traditional attributes of the elements, such as the path, the classes, or the IDs, but we all know these locators are sometimes flaky. The development team might change them or change the layout of the page, and then the locators break. So what should we do? Instead of using those traditional attributes, why don't we try to recognize elements visually, just as we do as human beings? When we scan a web page with our eyes, we look for where the elements are: where are the buttons, in the leftmost corner or in the middle of the page? In the same way, we can let the code match elements visually, if we train it on several similar icons. For example, on this slide I'm sharing an open repository; since it's open, I can share it without any commercial concerns, and you can go and check it. It contains several icons; in this case, an online shopping cart. If you are testing an online shopping website, you can train your model this way. Then, whenever there is a similar icon, not necessarily 100% the same, it will be recognized as a shopping cart. Once its location is recognized, the code can click on the element, and we can continue with the rest of the test steps.
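The talk trains a model on similar icons. As a much simpler stand-in that still shows the core idea of locating an element by its appearance rather than by ID or path, the sketch below uses plain template matching: it slides a small grayscale icon over a screenshot and picks the position with the smallest sum of squared differences. The screenshot and icon are tiny hypothetical pixel grids; a real setup would capture screenshots from the browser. This technique tolerates small pixel differences, but unlike a trained model it does not generalize across different icon designs.

```python
def locate_icon(screenshot, icon):
    """Return (x, y, score): the centre of the best-matching window and its SSD score.

    `screenshot` and `icon` are 2-D lists of grayscale values (0-255).
    A lower score means a closer match; 0 means an exact match.
    """
    sh, sw = len(screenshot), len(screenshot[0])
    ih, iw = len(icon), len(icon[0])
    best_score, best_left, best_top = None, 0, 0
    for top in range(sh - ih + 1):
        for left in range(sw - iw + 1):
            score = sum(
                (screenshot[top + r][left + c] - icon[r][c]) ** 2
                for r in range(ih)
                for c in range(iw)
            )
            if best_score is None or score < best_score:
                best_score, best_left, best_top = score, left, top
    # Click target: the centre of the matched window.
    return best_left + iw // 2, best_top + ih // 2, best_score


if __name__ == "__main__":
    # Hypothetical 3x3 "shopping cart" icon.
    cart = [[255, 0, 255], [255, 255, 255], [0, 255, 0]]
    # Blank 6x8 screenshot with a slightly different version of the icon at left=5, top=2.
    screen = [[0] * 8 for _ in range(6)]
    variant = [[250, 10, 240], [255, 250, 255], [5, 245, 0]]
    for r in range(3):
        for c in range(3):
            screen[2 + r][5 + c] = variant[r][c]
    x, y, score = locate_icon(screen, cart)
    print(f"click at ({x}, {y}), score {score}")  # → click at (6, 3), score 500
```

The returned centre coordinates are what a UI driver would click; production tools typically do the same search with an image library or a trained detector instead of nested Python loops.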

Next, after we complete our implementation, we can execute the test case, and during the execution we can collect several metrics. For example, the execution duration: how much time do we need to complete the test execution? Let's say it is 15 seconds per test case.

6. Observation and Outlier Detection

Machine learning algorithms can detect outliers in execution times, automatically notifying us of risky or unexpected situations. By analyzing observations and comparing them to previous results, we can identify potential issues and investigate further.

This is the observation, the training, right? Now, whenever we have a new execution that takes not 15 seconds, not 20 seconds, but two minutes, it's obviously an outlier, right? In this case, a machine learning algorithm can warn me, can notify me: my expected result was around 15 seconds, based on my previous observations, but this time it took two minutes, so please go and check whether something is wrong. Maybe some responses came late from the system, or there might be something else. All these kinds of observations can be done automatically. Instead of going through all the executions one by one, we can be notified automatically about risky executions that show outliers or unexpected situations.
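A simple statistical version of this check fits in a few lines. The sketch below is an illustration under stated assumptions, not the algorithm from the talk: it treats previous execution durations as the "training" observations and flags a new duration whose z-score (its distance from the mean, in standard deviations) exceeds 3, a common but arbitrary threshold. The durations are made-up values around the 15-second example.

```python
from statistics import mean, stdev


def is_duration_outlier(history, new_duration, threshold=3.0):
    """Flag `new_duration` if it lies more than `threshold` standard deviations
    from the mean of the previously observed durations (z-score test)."""
    if len(history) < 2:
        raise ValueError("need at least two previous observations")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return new_duration != mu  # every previous run took exactly the same time
    return abs(new_duration - mu) / sigma > threshold


if __name__ == "__main__":
    previous_runs = [14.8, 15.2, 15.0, 16.1, 14.5, 15.4]  # seconds, hypothetical
    for duration in (16.0, 120.0):
        flag = "OUTLIER, please check" if is_duration_outlier(previous_runs, duration) else "ok"
        print(f"{duration:6.1f} s -> {flag}")
```

A z-score is itself sensitive to outliers already present in the history; a median-based rule, or a learned model per test case, is more robust when past runs are noisy.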

7. Maintenance, Bug Management, and Machine Learning

In the maintenance stage, we can teach machines to do code reviews and identify anti-patterns. Self-healing test cases can help identify the root causes of failures and suggest fixes. Migrating between frameworks can be achieved through model training. Finally, a case study on bug management is shared, focusing on classifying bugs by severity level. Pre-processing and feature extraction are key steps: converting text into numbers allows computers to understand the data. Classification is the next stage, followed by collecting the results.
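As a small illustration of the code-review idea, the sketch below checks Python test code for the anti-pattern used as the example in this part of the talk, hard-coded magic numbers, using the standard `ast` module. It is rule-based rather than learned: the whitelist of allowed values and the rule that numbers assigned to UPPER_CASE names count as named constants are assumptions a team would tune, and a trained reviewer model would replace these hand-written rules.

```python
import ast

ALLOWED = {0, 1, 2}  # hypothetical whitelist of "obvious" numbers


def find_magic_numbers(source: str):
    """Return sorted (line, value) pairs for numeric literals that look like magic numbers.

    Numbers inside an assignment to UPPER_CASE names are treated as named constants.
    """
    tree = ast.parse(source)
    named_constant_nodes = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Assign) and all(
            isinstance(target, ast.Name) and target.id.isupper() for target in node.targets
        ):
            # Cover values like "TIMEOUT = 2 * 60" or "OFFSET = -5" as well.
            for inner in ast.walk(node.value):
                named_constant_nodes.add(id(inner))

    findings = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
            and node.value not in ALLOWED
            and id(node) not in named_constant_nodes
        ):
            findings.append((node.lineno, node.value))
    return sorted(findings)


if __name__ == "__main__":
    test_code = (
        "import time\n"
        "RETRY_LIMIT = 3\n"
        "def test_checkout():\n"
        "    time.sleep(7)\n"
        "    assert len([1, 2]) == 2\n"
        "    assert 0.25 > 0\n"
    )
    for line, value in find_magic_numbers(test_code):
        print(f"line {line}: magic number {value}, please go and check")
```

Each finding is a review comment the machine can raise before a human reviewer looks at the change.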

The final stage is maintenance, and refactoring is one activity we can do in this stage: reviewing the code and improving or refactoring it. If we can somehow teach machines to do code reviews, for example by teaching them good practices, or even the anti-patterns we have to avoid, then they can review the code. For example, if I have a hard-coded magic number inside the code and I have taught the machine that this is an anti-pattern I want to avoid, then whenever it detects one it can notify me: I found an anti-pattern here, please go and check. This speeds up our processes, because otherwise we could only do peer reviews, which take time, right? I send my code to a teammate, he or she checks it and provides feedback, I check again, and it goes back and forth. This way, the first review can already be done with the help of machines. Self-healing is another option: whenever a test case fails, we try to understand the root cause automatically, and the system suggests some options to fix the failure. And migration, which I discussed on one of the previous slides: migrating from Cypress to Playwright, or converting from one framework to another, can also be achieved by training our models. Finally, the last part is a case study where I share my personal experience. It is related to bug management, and I will explain how we can manage the bugs or tickets in our projects using machine learning. In this project, I had almost 900 tickets in our issue tracking system. First, I exported these tickets from the project management tool to an Excel spreadsheet. There were three different severity levels: severity 1, 2, and 3, right?
These are different criticality or priority levels, and what I'm trying to do is classify the bugs into these severity levels. For this purpose, I first did the pre-processing, that is, cleaning the data. If there is any text in the ticket descriptions that I don't need, I remove it. I also apply some transformations, like removing punctuation or making all words lowercase. Then I do the feature extraction. What is feature extraction? As we all know, in machine learning a normal string, like the English description I write to describe my bug, cannot be understood by machines directly, right? That is our language as humans. If we want to talk not to another human but to a computer, we have to use another language: the machine's language. So how does it work? I have to convert these strings, these text values, into numbers, because numbers, ultimately zeros and ones, are what a computer understands. There are different approaches to this conversion, but what I did in this case was count the frequency of each word in each description. For example, if a specific word occurs two or three times in a sentence, that count becomes the feature for that word in this sample. After having the features, the next stage is the classification itself, and finally we collect the results from these models.
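The pipeline described above (pre-processing, word-count features, classification) can be sketched in plain Python. The talk does not name the classifier, so multinomial Naive Bayes is used here purely as an illustrative choice, and the ticket texts and severity labels are invented; a real project would more likely use a library such as scikit-learn on the exported spreadsheet data.

```python
import math
import re
from collections import Counter, defaultdict


def preprocess(text: str) -> list[str]:
    """Lowercase the text, drop punctuation, and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def extract_features(text: str) -> Counter:
    """Bag-of-words features: how often each word occurs in the description."""
    return Counter(preprocess(text))


class NaiveBayesTriage:
    """Multinomial Naive Bayes over word counts, with Laplace (add-one) smoothing."""

    def fit(self, descriptions, severities):
        self.class_totals = Counter(severities)
        self.word_counts = defaultdict(Counter)
        self.vocabulary = set()
        for text, severity in zip(descriptions, severities):
            features = extract_features(text)
            self.word_counts[severity].update(features)
            self.vocabulary.update(features)
        self.n_samples = len(severities)
        return self

    def predict(self, description):
        features = extract_features(description)
        best_class, best_score = None, -math.inf
        for severity, count in self.class_totals.items():
            score = math.log(count / self.n_samples)  # class prior
            words_in_class = sum(self.word_counts[severity].values())
            for word, freq in features.items():
                if word not in self.vocabulary:
                    continue  # words never seen in training carry no evidence
                likelihood = (self.word_counts[severity][word] + 1) / (
                    words_in_class + len(self.vocabulary)
                )
                score += freq * math.log(likelihood)
            if score > best_score:
                best_class, best_score = severity, score
        return best_class


if __name__ == "__main__":
    tickets = [  # invented examples: (description, severity)
        ("Checkout crashes and payment fails for all users", 1),
        ("Login fails, users cannot sign in at all", 1),
        ("Search results are sorted in the wrong order", 2),
        ("Filter on the book list returns wrong results", 2),
        ("Typo in the footer text", 3),
        ("Button colour slightly off on the settings page", 3),
    ]
    model = NaiveBayesTriage().fit([t for t, _ in tickets], [s for _, s in tickets])
    print(model.predict("Payment fails at checkout!"))  # → 1
```

With around 900 real tickets, the same steps apply, but the model's quality would have to be measured on held-out tickets rather than on the training data.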

8. Results of Classification and Bug Management

In the first cycle, the confusion matrix showed around 73% accuracy. To avoid bias, I then kept class 2, which most bugs are labeled with and which was the important one to detect, on its own, and collected all the other classes into one. The resulting accuracy was 82%, still not sufficient to rely on for all activities. We can also use clustering to manage bugs.

So let me share some results. In the first cycle, this was the confusion matrix I had, with around 73% accuracy. Then I merged classes: the other classes, labeled 3 and 4, were combined to avoid bias, because class 2 is the class most of the bugs are labeled with. Class 2 was the important one for me to detect: a class 2 ticket was a release blocker, so deciding whether a ticket is class 2 or not is an important decision. So I collected all the other classes into one class. In this case, I had 82% accuracy, which is maybe still not sufficient to rely on fully in my activities, because it was a difficult problem. Even as humans, we sometimes discuss bug triage a lot, right? For me a bug might be a 2, while for someone else it's a 3 or a 4. So on top of classification, we can also use clustering to manage our bugs.
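The evaluation step and the class merge can be sketched as follows. The labels and predictions are made-up values, not the talk's data, so the resulting accuracies are illustrative only. The helpers build a confusion matrix (rows are actual classes, columns are predicted classes), compute accuracy as the share of correct predictions, and relabel everything except class 2 into a single "other" class, matching the "class 2 or not" decision.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes, in the order of `labels`."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def accuracy(actual, predicted):
    """Share of predictions that match the actual label (the matrix diagonal)."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)


def merge_into_binary(labels, keep=2, other="other"):
    """Keep the class that matters (the release-blocking class 2) and merge the rest."""
    return [label if label == keep else other for label in labels]


if __name__ == "__main__":
    actual = [1, 2, 2, 3, 2, 1, 3, 2, 3, 2]     # hypothetical true severities
    predicted = [1, 2, 3, 3, 2, 3, 1, 2, 3, 2]  # hypothetical model output
    print(confusion_matrix(actual, predicted, [1, 2, 3]))  # → [[1, 0, 1], [0, 4, 1], [1, 0, 2]]
    print(f"3-class accuracy: {accuracy(actual, predicted):.0%}")  # → 70%

    binary_actual = merge_into_binary(actual)
    binary_predicted = merge_into_binary(predicted)
    print(confusion_matrix(binary_actual, binary_predicted, [2, "other"]))  # → [[4, 1], [0, 5]]
    print(f"class 2 vs other accuracy: {accuracy(binary_actual, binary_predicted):.0%}")  # → 90%
```

Merging removes confusions between the non-critical classes from the error count, which is one reason the merged task scores higher; the two accuracies therefore measure different problems and are not directly comparable.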
